Data and analytics work always seems to start with the same problem: how do we get the data where it’s needed so that we can start getting insights? The problem isn’t getting the data from point A to point B, but doing so in a way that is easy, cost-effective, reliable, and appropriately scalable for the use case. With the rise of the different cloud providers and their toolsets, I thought it would be fun to take a swing at implementing a serverless, JSON-based approach using AWS.

This will be a series of articles, broken down as follows:

- Architecture: What’s the approach?

- Development Process: How did I set up an environment that was effective and efficient for development?

- Difficulties: What issues came up, and how did they get resolved?

- End results: Does this architecture achieve the goals that it set out to achieve?

Diving in, the architecture plan is outlined below. We’ll go into each of the boxes in detail, but first let’s frame the use case for this project:

I want a solution that can be used in my personal data projects, can scale up to N data ingestion pipelines as needed, and is cheap to operate.

With that goal in mind, the solution uses technologies that support these objectives:

- Scalability: All of these technologies are fully managed services that can scale from gigabytes to terabytes of data automatically. Additionally, the Lambda Python functions I’ve written are entirely serverless.

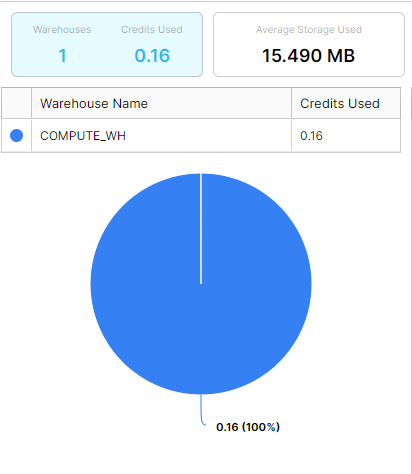

- Cost: Cost is based entirely on usage, so if nothing is running, all I’m paying for is the storage of persistent data. DynamoDB’s on-demand capacity pricing charges $0.25 per GB, so using this service as a landing location before moving data into Snowflake is extremely affordable given the budget.

- Upkeep/maintenance: Everything but the data layer is serverless, so there are no EC2 instances to keep up. No patching, and no server statuses to monitor. And, worst case, no script kiddies getting into an unprotected server in my VPC and forcing me to start over from scratch.
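To put the cost point in concrete terms, here is a rough back-of-the-envelope sketch. It assumes the $0.25 per GB figure quoted above applies as a flat storage rate, and it ignores request charges and any free tier, so treat the output as an order-of-magnitude estimate rather than a bill. The function name and constant are made up for illustration.

```python
# Assumption: flat storage rate taken from the figure quoted in the article.
STORAGE_RATE_PER_GB = 0.25  # USD per GB stored


def estimate_storage_cost(stored_gb: float,
                          rate_per_gb: float = STORAGE_RATE_PER_GB) -> float:
    """Return a rough storage cost in USD for the given number of gigabytes."""
    if stored_gb < 0:
        raise ValueError("stored_gb must be non-negative")
    return round(stored_gb * rate_per_gb, 2)


if __name__ == "__main__":
    # Print the estimate for a few personal-project-sized data volumes.
    for size_gb in (1, 10, 100):
        print(f"{size_gb} GB -> ${estimate_storage_cost(size_gb):.2f}")
```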

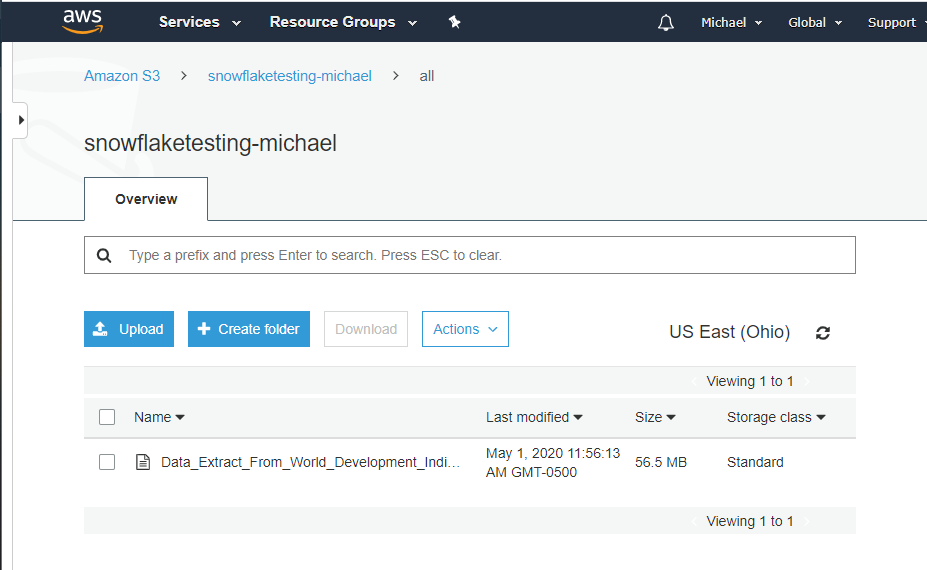

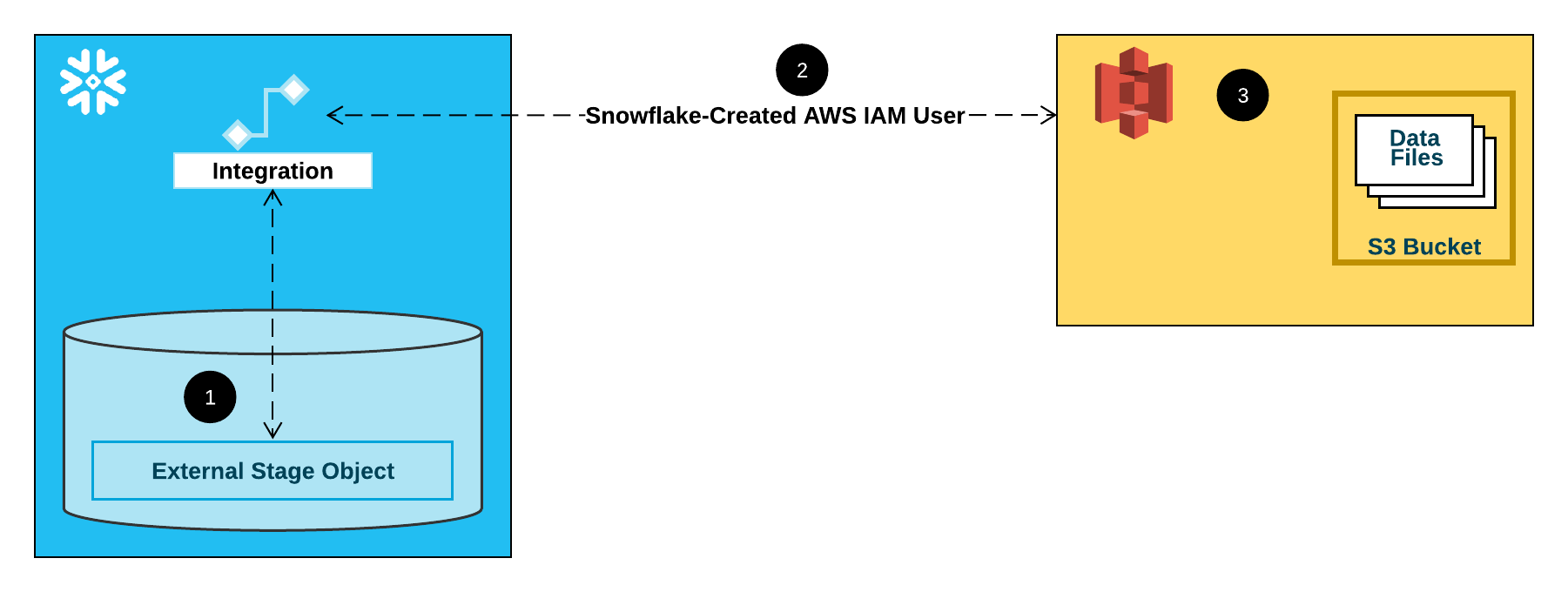

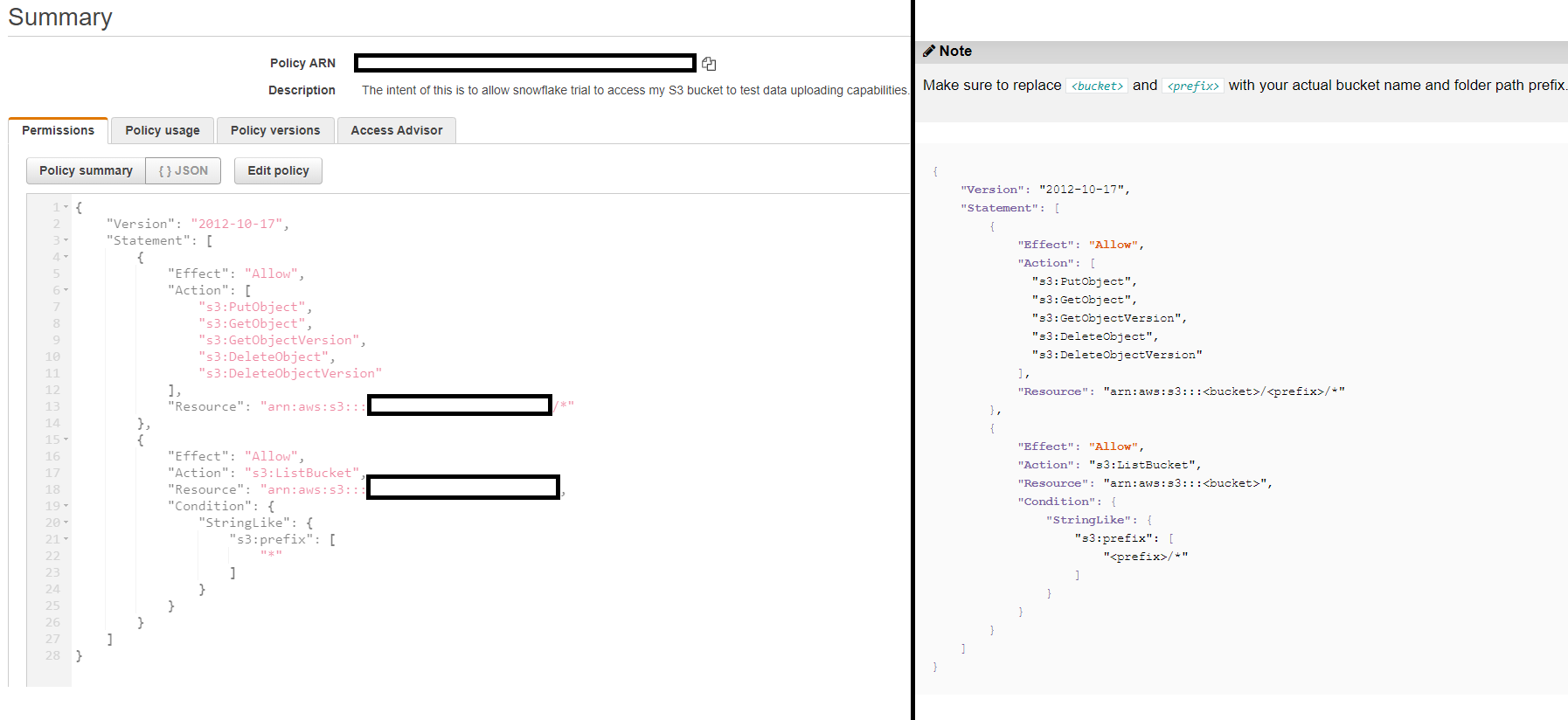

So, pretty straightforward from an overall technology standpoint, right? The other item to note is how the Lambda functions are written in Python. The idea behind the S3 bucket structure is to funnel all of the data for ingestion into a single location, and to ensure that the data is in a similar format before it lands in DynamoDB.

With the Lambda functions in the GitHub repo here, we ensure that there is a key present that uniquely identifies the exact upload record and its origin, so we can reuse the upload process for as many different feeds as we want, from whatever buckets we want. It’s completely configurable: you can point it at a bucket you own or at someone else’s bucket, and land the data in your own bucket.
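As a minimal sketch of what such a key could look like (this is not the code from the repo), the helper below combines the source bucket, the object key, an upload timestamp, and a random suffix. The function name and all field names are hypothetical.

```python
import time
import uuid
from typing import Optional


def build_upload_record(source_bucket: str, object_key: str,
                        uploaded_at: Optional[float] = None) -> dict:
    """Describe one upload: where it came from, plus a key unique to it."""
    if uploaded_at is None:
        uploaded_at = time.time()
    return {
        # Hypothetical field names, chosen for illustration only.
        # The random suffix keeps two uploads in the same instant distinct.
        "upload_id": f"{source_bucket}/{object_key}/{uploaded_at}/{uuid.uuid4().hex}",
        "source_bucket": source_bucket,
        "source_key": object_key,
        "uploaded_at": uploaded_at,
    }
```

Because the origin is stored alongside the identifier, the same upload path can serve any number of feeds without the records becoming ambiguous.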

Here’s one of the functions, demonstrating a super straightforward movement/copy function to get our data to a single ingestion bucket:

# Read data file from S3 location

# Unpack/Unzip into JSON

# Load to landing bucket location

def copy_object(self,source_bucket,object_key,target_bucket):

target_object = object_key + str(time.time())

copy_source = {

'Bucket' : source_bucket,

'Key' : object_key

}

s3 = boto3.resource('s3')

landing_bucket = s3.Bucket(target_bucket)

try:

landing_bucket.copy(copy_source, target_object)

except Exception as ex:

print(ex)

else:

print('Success! Object loaded to: ' + target_object)

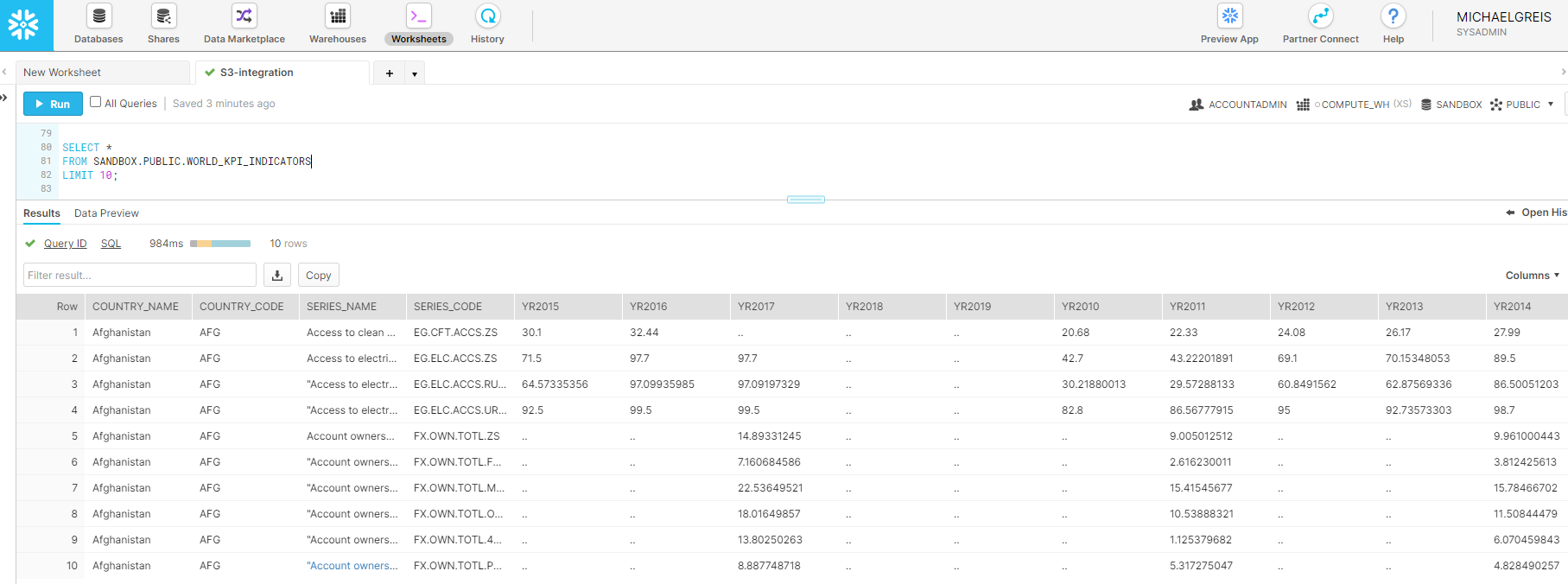

return (target_object)After this, it’s a matter of moving the data along the layers with our Lambda functions, manipulating the data as necessary, and ending up with that data inside of DynamoDB. The idea here being, if we build out the required functions in Lambda, these core python classes used in the Lambda functions to load the data for as many sources as we want, as long as they are similar.
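To illustrate how a Lambda function might learn which object to move, here is a small sketch that pulls the bucket and key out of an S3 event notification. The `Records[].s3.bucket.name` and `Records[].s3.object.key` fields are the standard S3 event layout; the helper name and the handler body are assumptions for illustration and are not taken from the repo.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event: dict) -> list:
    """Return (bucket, key) pairs for every S3 record in a Lambda event."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record.get("s3")
        if s3_info is None:
            continue  # Skip records that are not S3 notifications
        bucket = s3_info["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications, so decode them
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    # Hypothetical entry point: a real handler would call the copy logic here
    found = extract_s3_objects(event)
    for bucket, key in found:
        print(f"Would move s3://{bucket}/{key} to the landing bucket")
    return {"processed": len(found)}
```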

As an example, do you have customer data being sent from many different sources, each formatted slightly differently? Well, we can get that data into a single DynamoDB table to load into our relational Snowflake database for analytics, or access the data directly using DynamoDB’s API. All of the data in this example is landed in a single table, and can be identified by source for individual processing/analytics.
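One way to picture the single-table idea is to tag every record with its source before it is written. The sketch below is an assumption about how that could be done, not the author’s implementation: the `source` and `record_id` attribute names are hypothetical, and the function only prepares the items without talking to AWS.

```python
import json
from decimal import Decimal


def to_dynamo_items(raw_json: str, source: str, upload_id: str) -> list:
    """Tag each JSON record with its source so many feeds can share one table."""
    # boto3's DynamoDB resource rejects Python floats, so parse them as Decimal
    records = json.loads(raw_json, parse_float=Decimal)
    if isinstance(records, dict):
        records = [records]  # Treat a single JSON object as a one-record feed
    items = []
    for index, record in enumerate(records):
        item = dict(record)
        item["source"] = source  # Hypothetical partition key
        item["record_id"] = f"{upload_id}#{index}"  # Hypothetical sort key
        items.append(item)
    return items
```

The resulting items could then be written with boto3, for example via `Table.batch_writer()` and `put_item(Item=item)`, and later filtered by `source` for per-feed processing.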

This all sounds straightforward, and developing this architecture truly was easier than other side projects/tinkering I’ve done, thanks to the tools that are available for developing Lambda functions and interacting with AWS infrastructure. In the next section I’ll talk about the tools I used, how code was deployed, and a few other relevant items that made all of this easier to do than expected.